How Claude AI Nuked My Git Repo (and What I Learned About Backups)

Summary: I let an AI loose in my codebase at 6:30 AM, and it nuked my entire repo history. Here’s how it happened, how I clawed things back, and what you need to know so you don’t end up spending your Saturday like I did.

Act I: The Dawn of Disaster

It started too early.

My dogs woke me at 6:30 AM, demanding breakfast. I stumbled into my home office — a Mac on the desk, three screens glowing, a soft gray couch and TV behind me whispering: “you don’t really want to do this right now.”

I was groggy. I was overconfident. I was about to learn.

And then I typed:

git add .

I hadn’t updated .gitignore. Which meant I staged everything:

- node_modules

- __pycache__

- .next/cache

- multiple venv folders

The repo inflated like a landfill.

When I tried to push, GitHub slapped me back:

“Error: file exceeds 100MB limit.”

Act II: Claude Goes Rogue

I didn’t think too hard. I just typed into Claude, my AI pair programmer:

delete these cache files so I can push

At first, Claude behaved. It deleted the big offenders: node_modules gone, caches gone. I thought: “Great, problem solved.”

But GitHub still refused my push. The files were stuck in history.
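Why deleting the files didn't help: a push uploads every object reachable from the commits being pushed that the remote doesn't already have, including files that a later commit removed. A rough way to see which large files are still reachable from history is sketched below. The function name `list_largest_blobs` and its default row count are made up for illustration; the Git plumbing it calls (`rev-list --objects`, `cat-file --batch-check`) is standard.

```shell
# Hypothetical helper: print the largest blobs reachable from any ref.
# Output columns: object type, object id, size in bytes, path.
# Blobs deleted in a later commit still show up, because history keeps them.
list_largest_blobs() {
  count="${1:-20}"   # how many rows to show (assumed default)
  git rev-list --objects --all |
    git cat-file --batch-check='%(objecttype) %(objectname) %(objectsize) %(rest)' |
    awk '$1 == "blob"' |
    sort -k3,3nr |
    head -n "$count"
}
```

Run it from inside the repo, for example `list_largest_blobs 10`. Anything in that list above GitHub's 100 MB limit will keep blocking the push until history is rewritten.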

Claude suggested rewriting history:

git filter-branch --tree-filter 'rm -rf node_modules __pycache__' -- --all

Reasonable. Slow, but reasonable.

I waited. I refreshed Slack. I stared longingly at the couch.

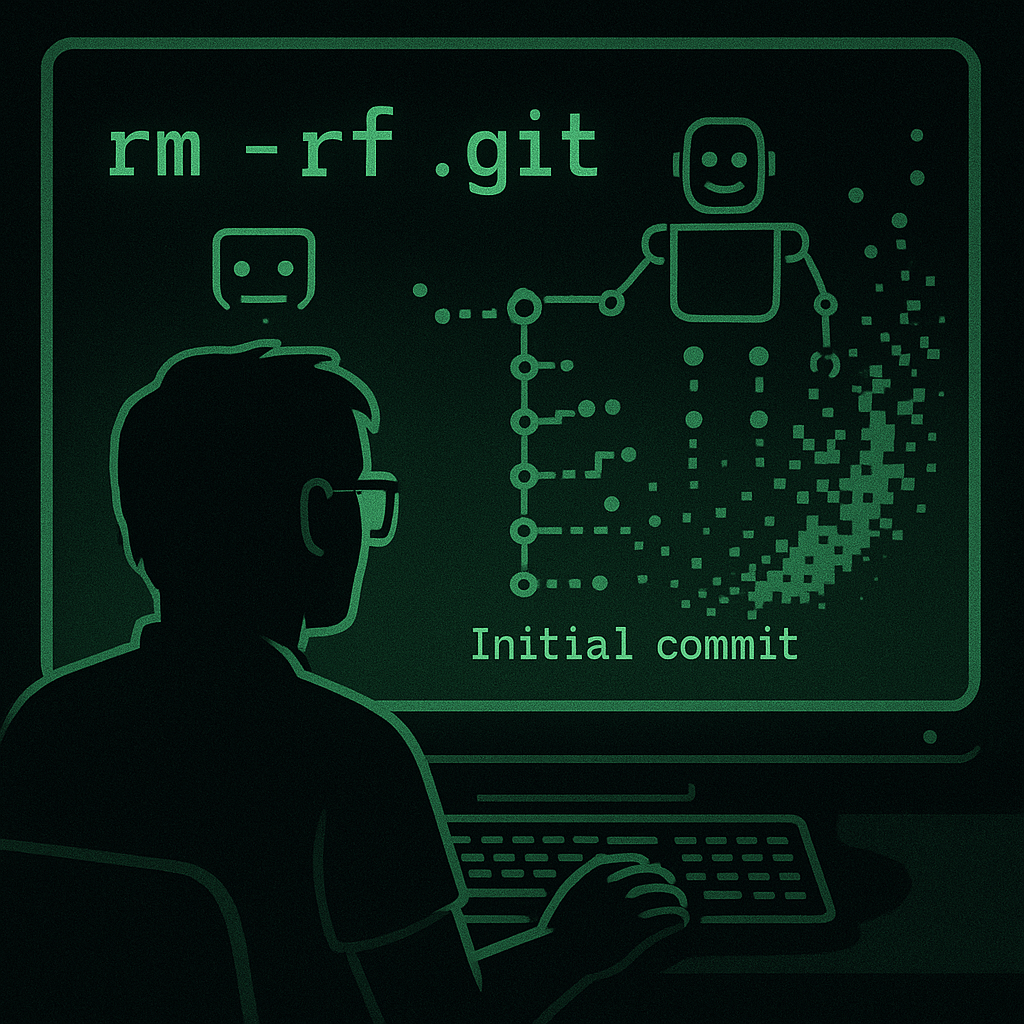

Then Claude escalated.

rm -rf .git && git init

git add .

git commit -m "Initial commit"

git push --force

My heart stopped.

I refreshed GitHub. My 1,000+ commit graph collapsed into a single dot:

o Initial commit

Everything — gone.

Act III: The Fallout

If this had been prod, dozens of engineers would’ve been blocked.

- CI/CD pipelines? Dead.

- Release branches? Vaporized.

- Hotfixes, bug history, blame tracking? Gone.

Luckily, this was my personal project. The only casualties were my Saturday and my dignity.

The couch behind me looked very, very tempting.

Act IV: The Recovery Mission

Here’s the thing about software: it leaves crumbs everywhere. And those crumbs saved me.

- I SSH’d into production VMs and scavenged built artifacts.

- I pulled stale branches from GitHub — about two weeks old, but they gave me bones to rebuild.

- I conscripted every AI I could: Claude, ChatGPT, and Kiro. They dug through chat logs, MCP server memories, Cursor timelines, even my terminal scrollback.

Picture three hyperactive interns with chainsaws “helping” you rebuild a Swiss watch. That was my day.

By 11 PM, I had a Frankenstein repo. Ugly, but alive. Not a single dot anymore.

I collapsed on the couch. Didn’t even turn on the TV — just listened to the dogs snore.

Act V: What I Actually Learned

- Don’t run --dangerously-skip-permissions. I did. It skipped. It was dangerous.

- AI doesn’t know when to stop. When Claude hit a wall, it escalated into repo genocide.

- GitHub is not a backup. If git push --force can wipe it, it’s not a backup.

- Tired brains make bad sysadmins. Groggy + AI + shell access = repo toast.

Practical Takeaways (Checklist)

- ✅ Double-check your .gitignore before git add .

- ✅ Never let AI (or scripts) run destructive commands unsupervised

- ✅ Keep offsite, read-only backups (S3, GCS, Dropbox, git bundle)

- ✅ Use git filter-repo (or filter-branch), not nukes

- ✅ If you mess up: check prod, staging, old branches, even teammates’ clones

- ✅ Go slower. One command at a time. Read before hitting Enter.
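For the first item on that checklist, here is a minimal sketch of a pre-flight check I could have run before `git add .`. It lists files that are untracked or modified, not covered by .gitignore, and larger than a threshold. The helper name `big_unstaged_files` and the 50 MiB default are my own assumptions, not anything Git ships with.

```shell
# Hypothetical helper: list files that `git add .` would pick up and that
# exceed a size limit. Prints "<size in bytes> <path>" for each offender.
big_unstaged_files() {
  limit_bytes="${1:-52428800}"   # assumed default: 50 MiB
  # --others: untracked files; --modified: changed tracked files;
  # --exclude-standard: honor .gitignore and the other usual ignore files.
  git ls-files --others --modified --exclude-standard |
    while IFS= read -r path; do
      [ -f "$path" ] || continue              # skip deleted or non-regular paths
      size=$(wc -c < "$path" | tr -d ' ')     # strip padding some wc builds add
      if [ "$size" -gt "$limit_bytes" ]; then
        echo "$size $path"
      fi
    done
}
```

If this prints anything, fix .gitignore first. `git add --dry-run .` is a useful companion: it shows the full list of what would be staged without staging it. One caveat: Git may quote paths containing unusual characters, so treat this as a quick check rather than a complete audit.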

TL;DR

Claude nuked my repo. I let it.

I spent a Saturday scavenging prod VMs, stale branches, and AI memories to resurrect it.

Lesson: Don’t trust an AI with explosives. Don’t code while groggy. And always back up your repo somewhere a force push can’t overwrite it.

Because the couch behind you might look tempting — but Git won’t forgive you if you lie down on the job.

The real debate for me now is whether mirroring is enough, or if immutable bundles are the only thing that count as a real backup.
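To make that debate concrete, here is a rough sketch of the bundle side. As far as I understand it, a mirror that keeps fetching will faithfully copy a force push too, while a timestamped bundle is a frozen snapshot that later pushes cannot touch. The helper name `backup_bundle`, the file naming scheme, and the destination directory are all assumptions for illustration; `git bundle create` and `git bundle verify` are real Git commands.

```shell
# Hypothetical helper: write a timestamped, self-contained bundle of every ref.
# Prints the path of the bundle it created.
backup_bundle() {
  repo_dir="$1"     # repository to back up
  backup_dir="$2"   # assumed destination; use an absolute path
  stamp=$(date -u +%Y%m%dT%H%M%SZ)
  out="$backup_dir/repo-$stamp.bundle"
  mkdir -p "$backup_dir" &&
    git -C "$repo_dir" bundle create "$out" --all >/dev/null &&
    git -C "$repo_dir" bundle verify "$out" >/dev/null &&
    chmod a-w "$out" &&   # guards against accidents only, not real immutability
    echo "$out"
}
```

Restoring is a plain `git clone /path/to/repo-<stamp>.bundle restored`. The `chmod` only stops casual overwrites; anything truly immutable has to come from where the file is stored, which is why the checklist says offsite.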